New paper in Neurocomputing: Deciphering the transformation of sounds into meaning: Insights from disentangling intermediate representations in sound-to-event DNNs

2025-10-02

In neuroscientific applications of deep neural networks (DNNs), interpretability of latent representations is crucial. Otherwise, we risk replacing one unknown (the brain) with another (the network).

This new Neurocomputing article by Tim Dick, Alexia Briassouli, Enrique Hortal, and Elia Formisano shows how invertible flow models can:

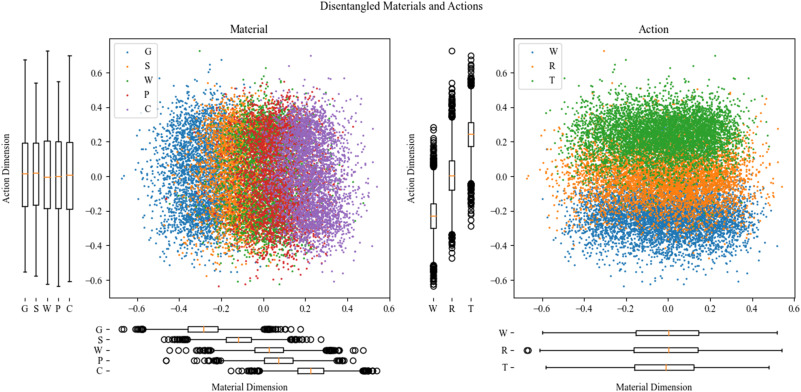

– Disentangle DNN latent spaces into interpretable dimensions (here: actions & materials in sound categorization networks).

– Enable systematic manipulations of these dimensions that predictably change the network’s outputs.
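To make the two points above concrete, here is a minimal toy sketch of the general idea: map a latent vector through an invertible transformation, edit one dimension, and map it back. It is not the paper's model and does not use the Gyoza API. The affine coupling layer, its fixed "conditioner", the function names, and the input values are all hypothetical stand-ins for a trained flow.

```python
import math

def _conditioner(a):
    # Hypothetical fixed conditioner; in a trained flow this would be a learned network.
    scale = [math.tanh(a[0]), math.tanh(a[1])]
    shift = [0.5 * a[1], -0.5 * a[0]]
    return scale, shift

def coupling_forward(z):
    """Affine coupling: keep the first half, transform the second half given the first."""
    a, b = z[:2], z[2:]
    scale, shift = _conditioner(a)
    return a + [bi * math.exp(s) + t for bi, s, t in zip(b, scale, shift)]

def coupling_inverse(u):
    """Exact inverse of coupling_forward."""
    a, v = u[:2], u[2:]
    scale, shift = _conditioner(a)
    return a + [(vi - t) * math.exp(-s) for vi, s, t in zip(v, scale, shift)]

# Made-up latent vector standing in for an intermediate DNN activation.
z = [0.3, -1.2, 0.8, 0.1]

u = coupling_forward(z)          # code in the transformed space
u_edited = list(u)
u_edited[2] += 1.0               # shift one dimension (assumed here to encode one factor)

z_edited = coupling_inverse(u_edited)   # edited latent to feed back into the network
z_roundtrip = coupling_inverse(u)       # without an edit, the original latent is recovered
```

Because the transformation is invertible, the unedited round trip recovers `z`, and an edit made in the transformed space corresponds to exactly one edited latent that can be passed on through the rest of the network.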

Although applied here to auditory networks, the method generalizes to other domains where neuroscientists use DNNs as computational models.

Check out the data and code, and the custom flow model library Gyoza.